Ruigang (Ray) Wang

Postdoctoral Researcher, ruigang.wang@sydney.edu.au

My research focuses on fundamental connections between control theory and machine learning:

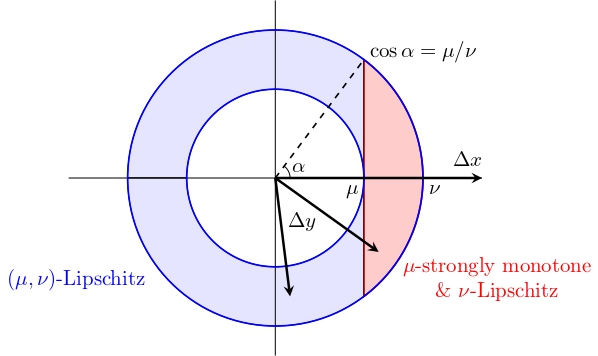

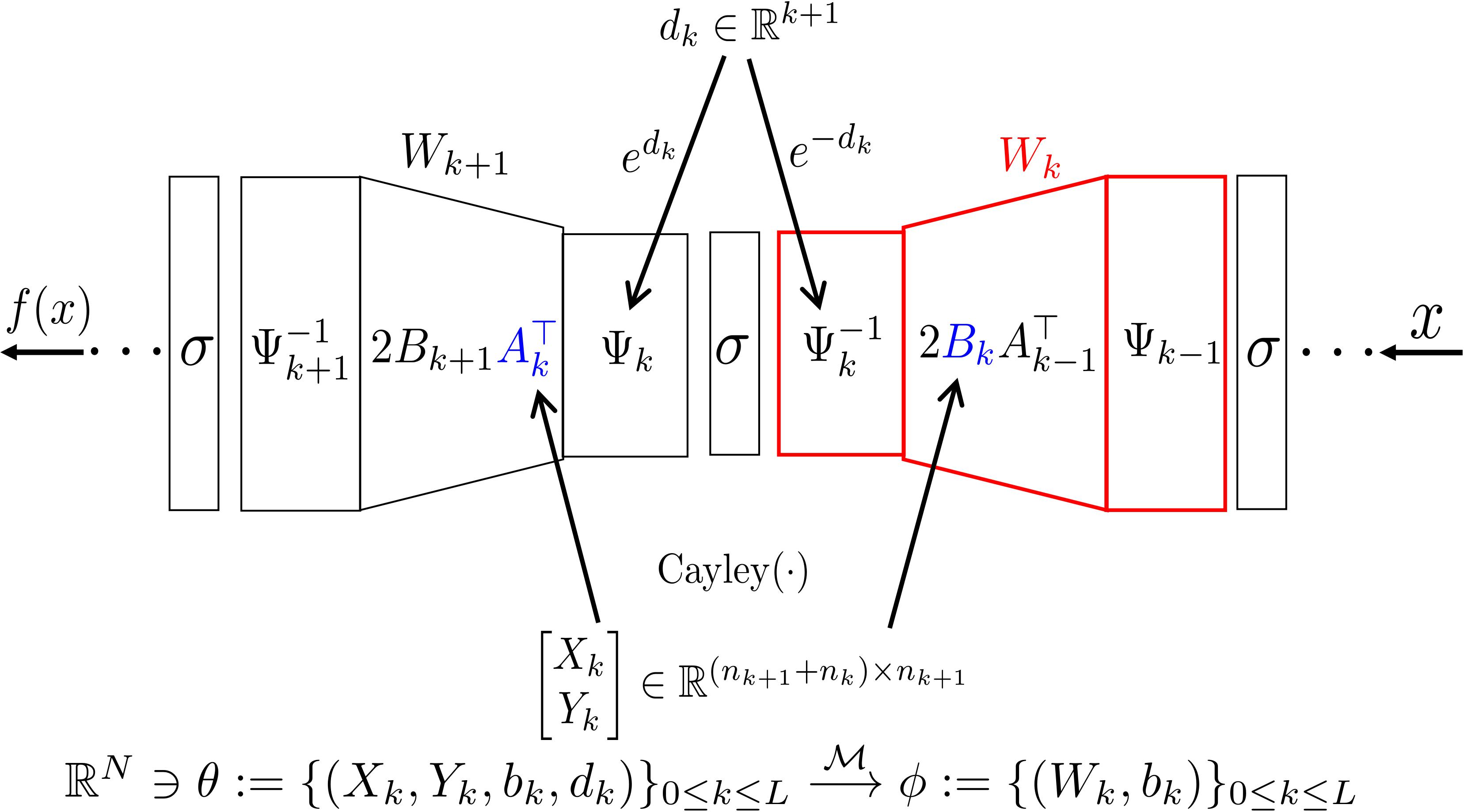

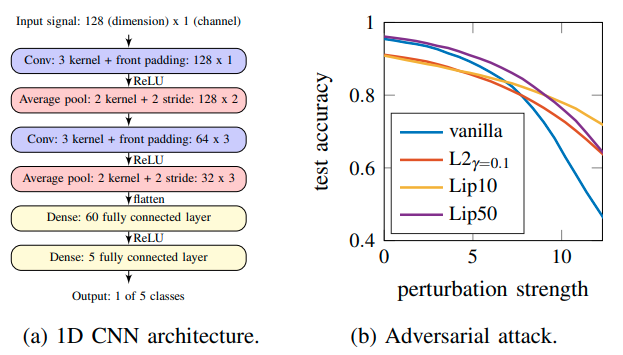

1. Control-theoretic tools for certified robustness of deep neural networks;

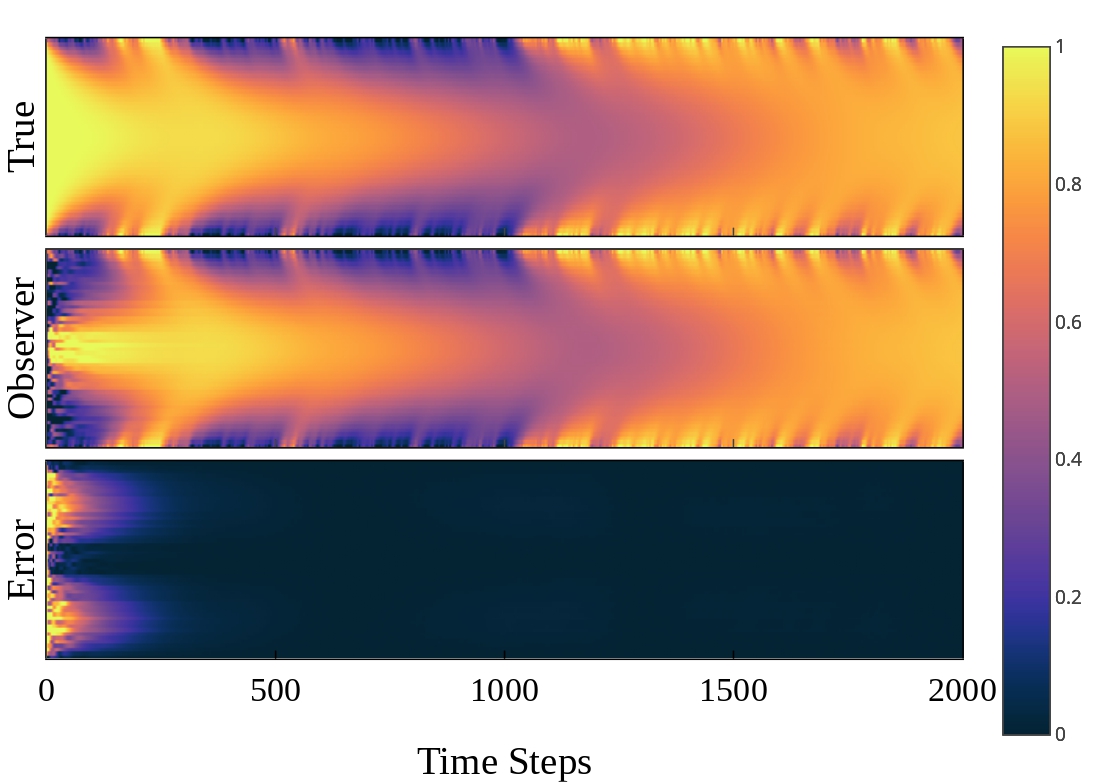

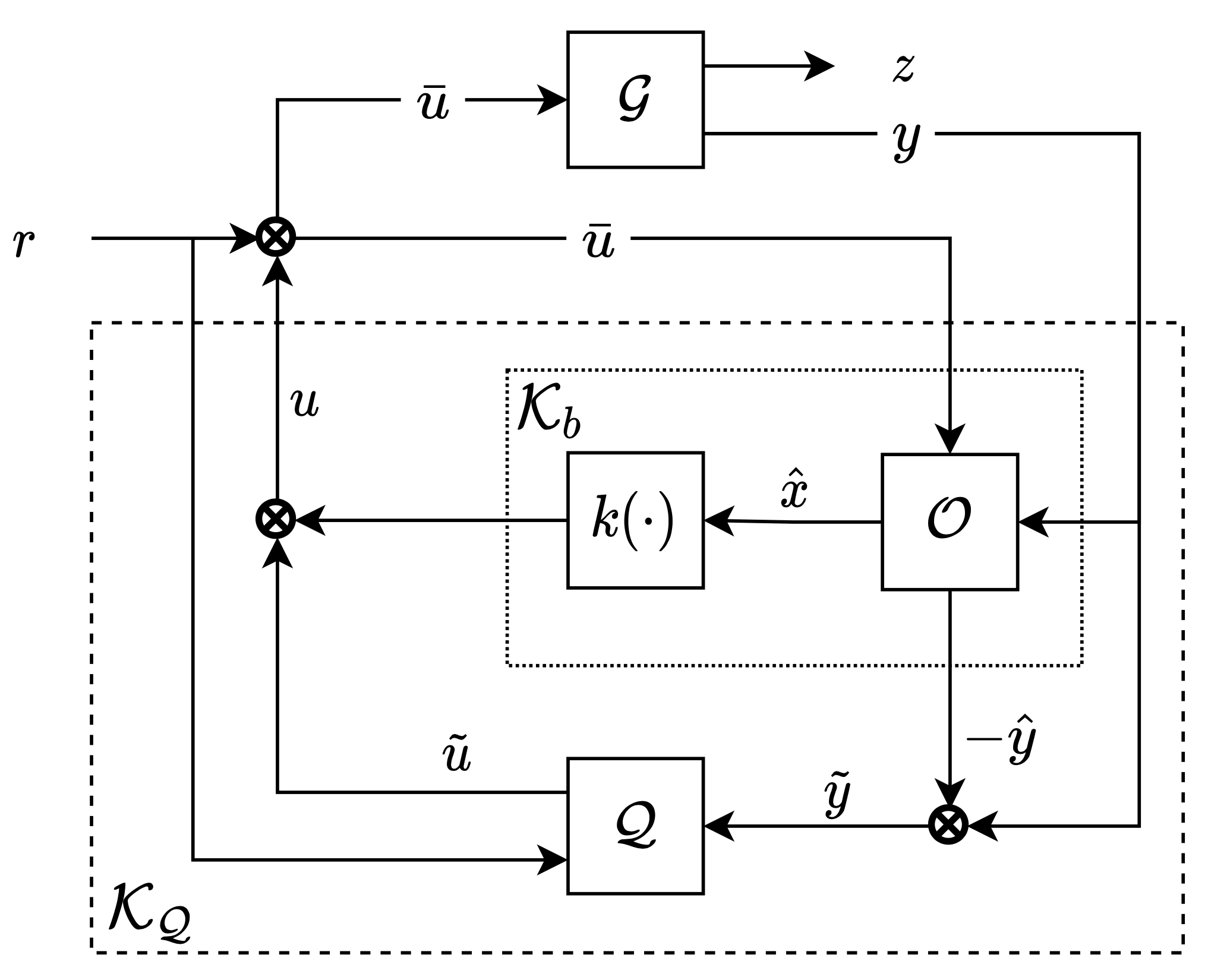

2. Trustworthy ML for complex system modeling, estimation, control and decision making;

3. Safety-critical applications such as human-robot interaction.